To get a better look at the world around them, animals are constantly in motion. Humans and other primates use complex eye movements to focus their vision (as people do when reading, for instance); birds, insects, and rodents do the same by moving their heads, and can even estimate distances that way. Yet how these movements play out in the elaborate circuitry of neurons that the brain uses to “see” is largely unknown. That gap could become a problem as scientists build artificial neural networks that mimic vision for use in self-driving cars.

To better understand the relationship between movement and vision, a team of Harvard researchers looked at what happens in one of the brain’s primary regions for analyzing imagery when animals are free to roam naturally. The results of the study, published Tuesday in the journal Neuron, suggest that image-processing circuits in the primary visual cortex are not only more active when animals move but also receive signals from a movement-controlling region of the brain that is independent of the region that processes what the animal is looking at. In fact, the researchers describe two sets of movement-related patterns in the visual cortex, which depend on head motion and on whether an animal is in the light or the dark.

The movement-related findings were unexpected, since vision tends to be thought of as a feed-forward computation system in which visual information enters through the retina and travels on neural circuits that operate on a one-way path, processing the information piece by piece. What the researchers saw adds to the evidence that the visual system has many more feedback components, in which information travels in the opposite direction, than had been thought.

These results offer a nuanced glimpse into how neural activity works in a sensory region of the brain, and add to a growing body of research that is rewriting the textbook model of vision in the brain.

“It was really surprising to see this type of [movement-related] information in the visual cortex because traditionally people have thought of the visual cortex as something that only processes images,” said Grigori Guitchounts, a postdoctoral researcher in the Neurobiology Department at Harvard Medical School and the study’s lead author. “It was mysterious, at first, why this sensory region would have this representation of the specific types of movements the animal was making.”

While the scientists weren’t able to definitively say why this happens, they believe it has to do with how the brain perceives what’s around it.

“The model explanation for this is that the brain somehow needs to coordinate perception and action,” Guitchounts said. “You need to know when a sensory input is caused by your own action as opposed to when it’s caused by something out there in the world.”

For the study, Guitchounts teamed up with former Department of Molecular and Cellular Biology Professor David Cox, alumnus Javier Masis, M.A. ’15, Ph.D. ’18, and postdoctoral researcher Steffen B.E. Wolff. The work started in 2017 and wrapped up in 2019 while Guitchounts was a graduate researcher in Cox’s lab. A preprint version of the paper was published in January.

The typical setup of past experiments on vision worked like this: Animals such as mice or monkeys were sedated, restrained so their heads were in fixed positions, and then shown visual stimuli, like photographs, so researchers could see which neurons in the brain reacted. The approach was pioneered by Harvard scientists David H. Hubel and Torsten N. Wiesel in the 1960s, and in 1981 they won a Nobel Prize in medicine for their efforts. Many experiments since then have followed their model, but it did not illuminate how movement affects the neurons that analyze images.

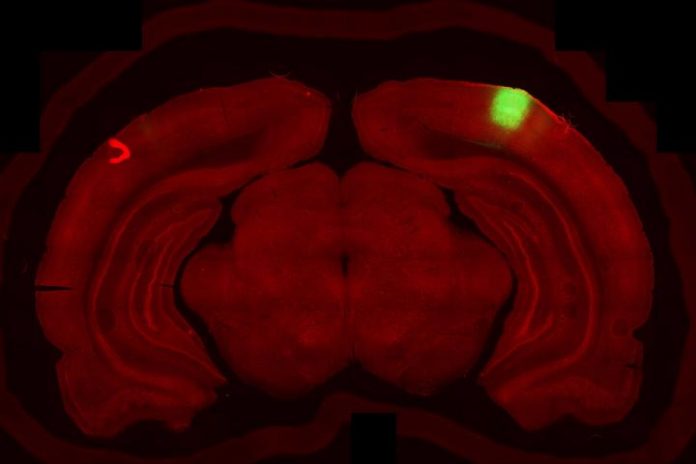

Researchers in this latest experiment wanted to explore that, so they watched 10 rats going about their days and nights. The scientists placed each rat in an enclosure, which doubled as its home, and continuously recorded its head movements. Using implanted electrodes, they measured the brain activity in the primary visual cortex as the rats moved.

Half of the recordings were taken with the lights on. The other half were recorded in total darkness. The researchers wanted to compare what the visual cortex was doing when there was visual input versus when there wasn’t. To be sure the room was pitch black, they taped shut any crevice that could let in light, since rats have notoriously good vision at night.

The data showed that on average, neurons in the rats’ visual cortices were more active when the animals moved than when they rested, even in the dark. That caught the researchers off guard: In a pitch-black room, there is no visual data to process. This suggested that the activity was being driven by signals from motor areas of the brain, not by an external image.

The team also noticed that the neural patterns in the visual cortex that fired during movement differed between the dark and the light, meaning the two patterns weren’t directly connected. Some neurons that were ready to activate in the dark were in a kind of sleep mode in the light.

Using a machine-learning algorithm, the researchers encoded both patterns. That let them not only tell which way a rat was moving its head just by looking at the neural activity in its visual cortex, but also predict the movement several hundred milliseconds before the rat made it.
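For readers curious what reading head direction out of neural activity can look like in practice, here is a minimal sketch in Python. It is not the study's method: the article does not name the algorithm the researchers used, so a simple nearest-centroid classifier stands in for it. The firing rates are synthetic and made up purely for illustration, and every name in the code (`simulate_trials`, `fit`, `predict`) is hypothetical. The sketch also covers only the first half of the result, classifying a movement from activity, not predicting it ahead of time.

```python
import random

def simulate_trials(direction, n_trials, rng, n_neurons=8):
    """Return made-up firing-rate vectors (spikes/s) for one head-turn direction.

    Assumption for illustration only: half of the simulated neurons fire
    more during left turns, the other half during right turns.
    """
    half = n_neurons // 2
    trials = []
    for _ in range(n_trials):
        rates = []
        for i in range(n_neurons):
            prefers_left = i < half
            mean = 10.0 if prefers_left == (direction == "left") else 4.0
            rates.append(max(0.0, rng.gauss(mean, 1.5)))
        trials.append(rates)
    return trials

def centroid(vectors):
    """Average a list of equal-length vectors, neuron by neuron."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit(train):
    """Map each direction label to the mean activity pattern seen for it."""
    return {label: centroid(vectors) for label, vectors in train.items()}

def predict(model, vector):
    """Return the label whose mean pattern is closest to this activity vector."""
    return min(model, key=lambda label: squared_distance(model[label], vector))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
train = {d: simulate_trials(d, 50, rng) for d in ("left", "right")}
held_out = [(d, v) for d in ("left", "right") for v in simulate_trials(d, 50, rng)]

model = fit(train)
accuracy = sum(predict(model, v) == d for d, v in held_out) / len(held_out)
print(f"decoding accuracy on held-out synthetic trials: {accuracy:.2f}")
```

The sketch scores the decoder only on trials it was not fitted on, which is the usual guard against a decoder that merely memorizes its training data. Real recordings are far noisier than this toy example, and would call for many more neurons and a more capable model.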

The researchers confirmed that the movement signals came from the motor area of the brain by focusing on the secondary motor cortex. They surgically destroyed it in several rats, then ran the experiments again. The rats in which this area of the brain was lesioned no longer showed the movement-related signals in the visual cortex. However, the researchers were not able to determine whether the signal originates in the secondary motor cortex. That region could merely be where the signal passes through, they said.

The scientists also pointed out some limitations in their findings. For instance, they measured only the movement of the head, not eye movement. The study is also based on rodents, which are nocturnal. Their visual systems share similarities with those of humans and other primates, but differ in complexity. Still, the paper adds to new lines of research, and the findings could potentially be applied to neural networks that control machine vision, like those in autonomous vehicles.

“It’s all to better understand how vision actually works,” Guitchounts said. “Neuroscience is entering into a new era where we understand that perception and action are intertwined loops. … There’s no action without perception and no perception without action. We have the technology now to measure this.”